AI is now smarter than the average human: What does this mean?

Claude 3 Opus scores 101 on an IQ test

Since ChatGPT took the world by storm, every week has brought a new announcement of mind-blowing capabilities in AI programs released by various companies: reading and understanding handwritten maths equations, automatically translating and dubbing videos, interviewing job candidates, generating high-quality videos from simple text descriptions, and much more.

If you haven’t been paying close attention, you’re making a mistake. You should subscribe to my AIIQ newsletter to stay informed.

Anyway, the latest news is that Claude 3 Opus (a recently released AI chatbot) has scored 101 on an IQ test. What should we make of that? The rest of this post contains my ramblings on different aspects of IQ and intelligence and artificial intelligence and how we should react to all this.

The average IQ is 100. IQ tests are scored so that results are approximately normally distributed, with a mean of 100 and a standard deviation of 15. This means that the average human will score 100 and that about 68% of all humans will have an IQ between 85 and 115. It also means that only about 2.3% of people in the world have an IQ above 130, and the fraction above 140 is about 0.4%.
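If you want to check these percentages yourself, here is a minimal sketch. It assumes IQ scores follow an exact normal distribution with mean 100 and standard deviation 15, which is only an approximation for real test data. The helper names `share_between` and `share_above` are my own, made up for illustration.

```python
from statistics import NormalDist

# Assumption: IQ scores are exactly normal with mean 100 and SD 15.
iq = NormalDist(mu=100, sigma=15)


def share_between(low, high):
    """Fraction of people with an IQ between low and high."""
    return iq.cdf(high) - iq.cdf(low)


def share_above(score):
    """Fraction of people with an IQ above score."""
    return 1 - iq.cdf(score)


print(f"Between 85 and 115: {share_between(85, 115):.1%}")  # 68.3%
print(f"Above 130: {share_above(130):.1%}")                 # 2.3%
print(f"Above 140: {share_above(140):.1%}")                 # 0.4%
```

Changing the cutoffs is a quick way to see how fast the tail thins out: each extra 15 points is one more standard deviation away from the mean.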

Is IQ a good measure of anything? This is a tricky question to answer. Some people casually use the term “IQ” synonymously with intelligence. Often they also assume that high IQ will result in high levels of achievement and success in life. Others think that IQ is a flawed, biased, Eurocentric measure with roots in scientific racism and eugenics. (See, for example, the book The Mismeasure of Man.) Which of these views is right?

I don’t know. I will instead make several statements, all of which are defensible, I believe.

1) Having a low IQ (i.e. below 80) almost guarantees that you cannot do intellectual/knowledge work and ensures that you can only get jobs that require minimally skilled work.
2) Having a high IQ is no guarantee of success. Having a higher IQ is somewhat correlated with higher success and wealth but it is neither necessary nor sufficient. Lots of people with high IQ do badly in life and lots of people with average or slightly above average IQ succeed wildly through other strengths (like, for example, hard work).
3) People who take pride in their high IQ and join high IQ societies like Mensa are pretentious bores.

AI going from IQ 80 to IQ 101 is significant. Because of all the problems with IQ measurement and its validity, it is not a good idea to pay too much attention to the exact IQ score of a person or an AI. However, a change from 80 to 100 is a step change and involves changing categories from “too dumb to do a skilled job” to “good enough”. In the “good enough” category, you can stop looking at IQ and start thinking of other abilities. For example, in humans, you would look for hard work, persistence, the ability to work in a team, communication skills, etc.

So the fact that Claude 3 Opus has scored 101 is significant. I think we’ll start seeing more of a real-world impact of these AI programs now.

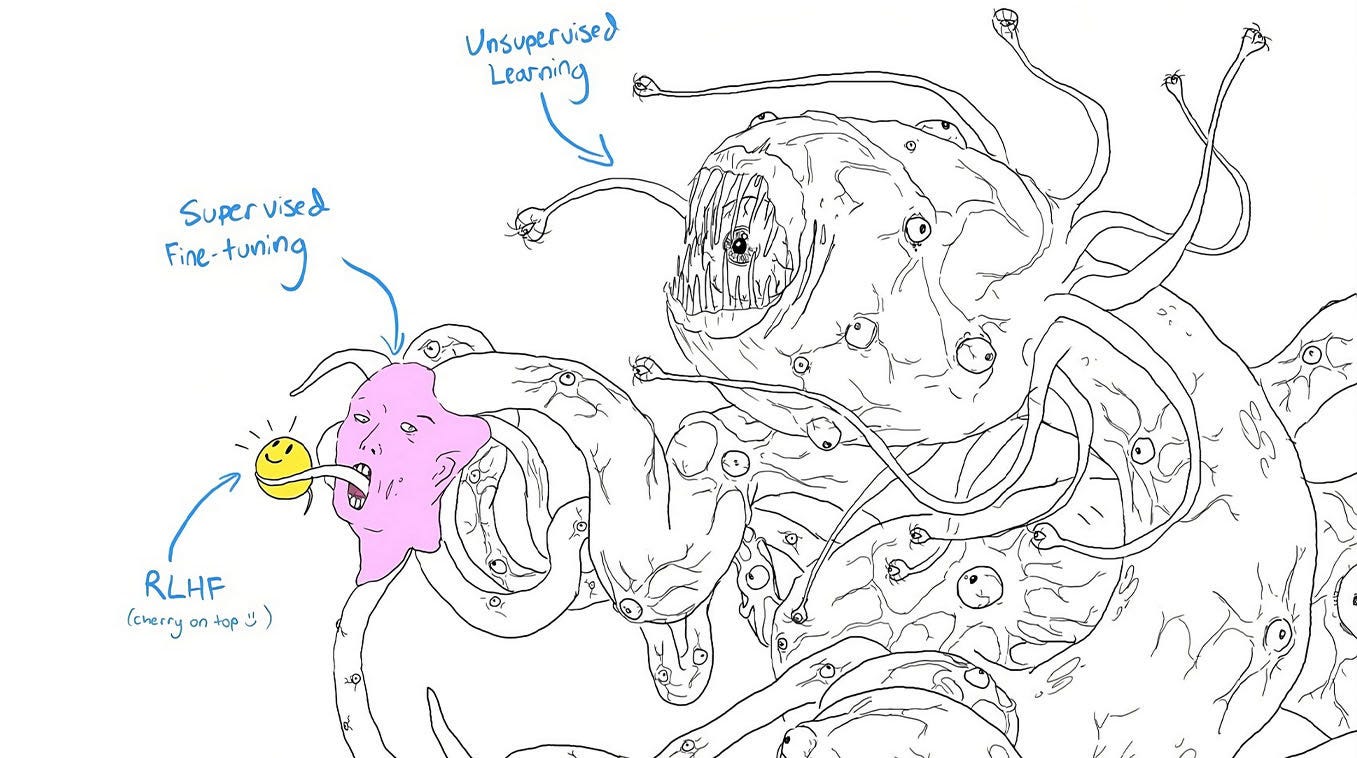

AIs are not Humans: All this talk of “average human” makes it seem like an AI chatbot is just like a person, but this is a very misleading and dangerous misconception. These AIs are bizarre creatures whose capabilities and behaviours can be very unpredictable and different from those of humans. An IQ of 101 means that the average capabilities are similar to those of an average human, but the details can be very different. An AI can be vastly superior to humans in some areas (for example, breadth of knowledge) but staggeringly dumb in others (for example, it can be terrible at calculations). See for example “the jagged frontier”.

When you compare the capabilities of different groups of humans (e.g. humans from different countries) there are lots of similarities. The questions that are easy for one group tend to be easy for the other groups too and the questions that are hard for one group tend to be hard for the other groups too. However, the same is not true for Claude: the questions it got right were unrelated to the questions humans got right. The important takeaway is this: always be prepared to be surprised by the capabilities of AI programs. In both directions.

Knowledge vs IQ: Even before Claude 3 Opus, programs like ChatGPT, Google’s Gemini, and others were doing some very impressive things. How do you reconcile that with the fact that their IQ scores were so low? The reason is simply that what they lack in IQ, they make up for in breadth of knowledge (and confidence). It is just like the many real people who have strong and confident opinions on every topic under the sun: they seem very smart at first, until you start digging deeper and find that there is no depth there. In the case of humans, IQ doesn’t change much in a lifetime. But for AI, a big change can happen in months.

So how to prepare for an AI future? I think it is pretty clear that AI will cause big changes. But nobody can predict exactly what those changes will be, and when, and who exactly will be affected, and how. Anyone who makes confident predictions is either an idiot or a scammer. So how do we prepare for these unpredictable changes? I think the only thing we can do is to have a fast OODA loop: pay close attention to the latest developments, do quick experiments to understand different ways in which AI can be used in your work and life, try to disrupt yourself before competitors disrupt you, and most importantly, act and react fast.

Thanks for this article. Loved it, especially as a senior who sometimes finds new technology intimidating.

Loved the article, Navin, and your comment about pretentious bores left me chuckling for a few minutes.