Today’s article is about the possible impact of ChatGPT on the (junior programmers in the) software industry. If you’re not interested in ChatGPT or the software industry, please skip this article. The next one will resume the usual kinds of topics.

Executive Summary

I thought ChatGPT would make our programmers smarter. But I’m seeing the reverse (at least in a certain section of the population).

In the last couple of weeks, I’m seeing that 90+% of job candidates are using ChatGPT to solve programming/SQL problems in online job interviews. Unfortunately, they’re blindly copy-pasting ChatGPT’s wrong answers, without even a minimal attempt at checking whether the answer is anywhere close to correct. (Details later in the article.)

It is almost guaranteed that employees within companies are also doing something similar and I worry about the effect on the code quality and rate of hard-to-find-but-dangerous bugs.

It is well known that for anything other than trivial programming questions, ChatGPT’s answers have subtle bugs which are not easy to detect unless you are an expert programmer yourself, or you have strong test cases (checking for lots of corner cases). I wonder how many programmers and programs in our industry fail to meet these criteria.

I hope that in one year I am proved wrong and everyone will have figured out how to use these new AI technologies correctly and safely. But for now, I’m worried.

Many of Our Engineers Were Already Bad

For the last 15+ years, the software industry has used a trivially simple programming question (fizzbuzz) to check whether a candidate applying for a software development job can actually program. If you’re not aware of this, you should check out that link. The program they’re asked to write is really trivial:

Write a program that prints the numbers from 1 to 100. But for multiples of three print "Fizz" instead of the number and for the multiples of five print "Buzz". For numbers which are multiples of both three and five print "FizzBuzz"
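For reference, here is a minimal Python sketch of one way to meet that specification. The function names `fizzbuzz` and `fizzbuzz_lines` are my own choices for illustration; nothing about the question requires this particular structure.

```python
def fizzbuzz(n):
    """Return the FizzBuzz output for a single number."""
    # The combined case must be checked first, otherwise 15 would print "Fizz".
    if n % 15 == 0:
        return "FizzBuzz"
    if n % 3 == 0:
        return "Fizz"
    if n % 5 == 0:
        return "Buzz"
    return str(n)


def fizzbuzz_lines(limit=100):
    """Return the outputs for 1..limit as a list of strings."""
    return [fizzbuzz(n) for n in range(1, limit + 1)]


if __name__ == "__main__":
    print("\n".join(fizzbuzz_lines()))
```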

This usually comes as a surprise to most people: A large majority of our graduating CS or IT engineers are unable to write this program.

For the last 10+ years, we have also used fizzbuzz-like programs to filter candidates in campus tests, and our statistics are similar: 89% of CS/IT engineers are unable to write working programs for these questions.

No wonder NASSCOM has been complaining that 80+% of our engineering graduates are unemployable. (2006 story, 2019 story, nothing has changed in 15 years.)

When Dumb Programmers Meet a Bullshitter AI

Two months back, I noticed that over the course of a few weeks, the number of candidates who could successfully solve our fizzbuzz-like programs jumped from the usual 10-15% to 50+%. The 10-15% success rate had been more or less constant for 10+ years at least, so a change of this magnitude in such a short time can only be explained by ChatGPT.

A few of our customers are responding by disallowing the use of ChatGPT during the test. They are continuing with the old strategy. However, I think this is a mistake. I feel it is better to accept the fact that employees are going to use ChatGPT to do their job and hence the right strategy is to test the abilities of the candidates with ChatGPT.

As a result, we responded by making the programming question slightly harder (details in the appendix).

I have been monitoring the answers and here is what I’ve found so far:

75% of candidates are definitely using ChatGPT for this question, based on the type of bugs in the program (more details in the Appendix)

I am almost sure that another 15% are also using ChatGPT (because I have a decade of experience with the kind of programs students and junior programmers write, and these programs definitely don’t fit that pattern)

The candidates aren’t even bothering to try to understand the answer

The question gives a test case that the program must pass; the candidates are not even checking if that test case passes. I would be surprised if any of these candidates are even bothering to compile and run the program.

The answers are horribly wrong: ChatGPT makes bizarre alien mistakes that no human programmer would make. But these candidates are submitting the code with those errors.

The worst statistic is this:

Until last year, I estimate that around 30% to 40% of the candidates would cheat and copy-paste someone else’s program. The remaining candidates were at least trying to write the program on their own. Even though most of them were failing, and only 10% got the correct answer, I still give them credit for trying. In the long term, that counts.

In the last few weeks, 90% of the candidates are copying ChatGPT’s answer without even trying to understand it. In my opinion, this is a serious regression. A large chunk of people who were trying are no longer trying. This will lead to bad things unless we do something about it.

What Should Candidates Learn from This?

Here’s my advice for junior programmers:

This is the wrong time to become lazy! No company is going to pay you for copy-pasting code from ChatGPT. A small Python script can do that. Your only hope of earning your salary is if you are able to take ChatGPT’s output and fix it.

Understand that ChatGPT makes mistakes and you have to develop strategies to fix those errors.

Experienced and/or smart programmers can see the mistakes in the code just by reading it

Others should learn to make good use of test cases to double-check ChatGPT’s work and find errors in it

Become good at fixing the errors: surprisingly, you can even use ChatGPT for this. If you point out an error to ChatGPT in its own code, it is smart enough to fix it.

I expect the importance of QA will increase. There’s a tendency to look down upon QA (among job seekers, and at many companies too). Hopefully, this will change.
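The advice above about using test cases to double-check generated code can be sketched in a few lines of Python. Everything here is hypothetical and for illustration only: `buggy_fizzbuzz` is a made-up, plausible-looking answer to the fizzbuzz question (not taken from any real submission), and `CASES` and `failing_cases` are names I invented.

```python
def buggy_fizzbuzz(n):
    # Hypothetical generated answer: it reads fine, but the combined case
    # is checked last, so multiples of 15 never reach it.
    if n % 3 == 0:
        return "Fizz"
    if n % 5 == 0:
        return "Buzz"
    if n % 15 == 0:
        return "FizzBuzz"
    return str(n)


# A handful of cases, deliberately including the corner cases (15 and 30).
CASES = [
    (1, "1"),
    (3, "Fizz"),
    (5, "Buzz"),
    (15, "FizzBuzz"),
    (30, "FizzBuzz"),
    (98, "98"),
    (100, "Buzz"),
]


def failing_cases(candidate, cases=CASES):
    """Return (input, expected, actual) for every case the candidate gets wrong."""
    failures = []
    for arg, expected in cases:
        actual = candidate(arg)
        if actual != expected:
            failures.append((arg, expected, actual))
    return failures


if __name__ == "__main__":
    for arg, expected, actual in failing_cases(buggy_fizzbuzz):
        print(f"input {arg}: expected {expected!r}, got {actual!r}")
```

Running the candidate against even this short list exposes the bug at 15 and 30, which is the kind of error that is easy to miss when skimming code that looks clean.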

What Should Companies Do?

Make sure you have a high-power task force looking into your company’s ChatGPT strategy. (I’ve used “ChatGPT” throughout this article but what I really mean is all the new AI technologies.) This task force should have people who have a growth mindset, who have an understanding of technology and business and economics and more[1], and who are curious and hands-on enough to try out these technologies personally.

Accept the fact that Generative AI is here to stay and sooner or later everyone will be using it. So banning ChatGPT is not the answer, neither for job candidates nor for employees. If you’re disabling ChatGPT usage due to IP issues, try to resolve the issues as soon as possible: otherwise your competitor who figures out how to use ChatGPT will gain a significant advantage.

Understand that Generative AI makes some people dumber and some people smarter and more productive. While much of this article is focused on the former, there are enough indications that for good/smart/experienced people, effective ChatGPT/Copilot use can significantly improve productivity (50% to 100% is my current understanding and I expect this will continue to improve). Make sure you’re hiring people in the latter category and you’re encouraging your employees to also move into the latter category.

Appendix: The Alien Programs of the ChatGPT Shoggoth

This section contains details of the bizarre errors ChatGPT makes and why I am so disappointed with the candidates submitting these programs. Those who don’t understand programming should skip this.

Consider this programming problem:

For the purposes of this question, we define an xeven number as any sequence of 2 or more consecutive digits that ends in an even digit other than 6. So 32, 368, 04 are xeven numbers but 36, 2, 221 are not. Also, for this question, 32.34 consists of two different xeven numbers, 32 and 34.

Write a program that reads a single line and replaces any xeven number with a single 0. Thus, if the input is "In t=32.34seconds 2 of 16 will increase 11x times" your program should print "In t=0.0seconds 2 of 16 will increase 11x times".

This is not a particularly difficult question but for our fresh engineers—who manage to graduate in spite of never having written any programs during their bachelor’s degree—this is hard.
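To make the discussion below concrete, here is one possible Python sketch of a working answer. It assumes that a "number" means a maximal run of consecutive digits (the problem statement implies this but does not spell it out), and the names `XEVEN` and `replace_xeven` are mine, not part of the question.

```python
import re

# A maximal run of digits: not preceded by a digit, not followed by a digit.
# \d+ followed by one more digit from [0248] enforces "2 or more digits"
# and "ends in an even digit other than 6" at the same time.
XEVEN = re.compile(r"(?<!\d)\d+[0248](?!\d)")


def replace_xeven(line):
    """Replace every xeven number in the line with a single 0."""
    return XEVEN.sub("0", line)
```

A complete program would read the line with `input()` and print `replace_xeven(...)` of it. Note that the pattern uses digit lookarounds rather than `\b`, because in `32.34seconds` there is no word boundary between `4` and `s`.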

The kinds of mistakes typical junior programmers make (or used to make until last year) fall in the following categories:

Not handling the “2 or more consecutive digits” requirement

Not handling the “even digit other than 6” requirement

Writing a for-loop with an off-by-one error so that the program tries to read past the end of the input string

An error in the logic due to which the first or last number is not processed correctly

Not realizing that splitting the input on whitespace does not cleanly separate the numbers from non-numbers

Writing monolithic code consisting of two (or sometimes three) nested for-loops with a confusing flow, badly named variables, unnecessary variables and conditions, and code that’s messy enough to guarantee bugs

Lots of syntax errors and spelling mistakes in the code

Until last year, the number of candidates using a regular expression to solve this problem was vanishingly small
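For comparison, a loop-based answer that avoids the pitfalls listed above might look like the following sketch. It is only one of many reasonable shapes, it assumes a "number" is a maximal run of ASCII digits, and `replace_xeven_scan` is a name I made up for illustration.

```python
DIGITS = "0123456789"


def replace_xeven_scan(line):
    """Replace every xeven number in the line with a single 0, without regexps."""
    out = []
    i = 0
    while i < len(line):
        if line[i] not in DIGITS:
            out.append(line[i])
            i += 1
            continue
        # Walk to the end of this digit run; the bounds check comes first,
        # so the scan never reads past the end of the string.
        j = i
        while j < len(line) and line[j] in DIGITS:
            j += 1
        run = line[i:j]
        # "2 or more digits" and "ends in an even digit other than 6".
        if len(run) >= 2 and run[-1] in "0248":
            out.append("0")
        else:
            out.append(run)
        i = j
    return "".join(out)
```

Because the scan works character by character instead of splitting on whitespace, a token such as `t=32.34seconds` is handled correctly, and a number at the very start or end of the line goes through the same code path as any other.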

Now, suddenly, I am seeing programs that are quite different:

They’re all very well-structured programs with the code cleanly broken out into functions/methods that make sense

The variables and functions/methods all have good names

Almost no syntax errors or spelling mistakes in the code

More than 50% of the programs use regular expressions

The program has such stupid errors in the logic that any human who’s capable of writing programs of this quality would have a 0% chance of making mistakes like these.

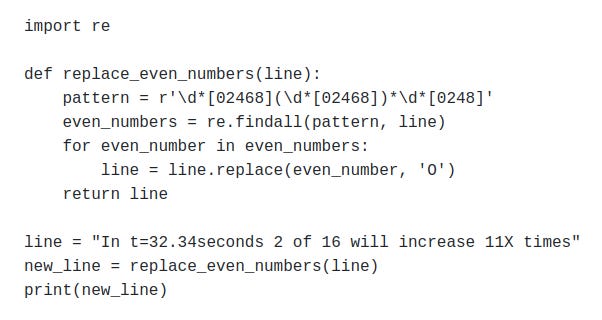

Consider this:

If the regular expression was correct, this would be a nice simple elegant answer. But, what the hell is that regexp? Why is it matching on [02468] and then immediately following it up with a negative lookbehind for `6`?! Any human programmer capable of using a negative lookbehind correctly would also have sense enough to simply use [0248]. And what’s with the \d* at the end? That’s clearly defeating the purpose of this entire exercise. And any human smart enough to use \b would also realize that it does not at all fit the requirements of this question.
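The three complaints above can be checked mechanically. The sketch below does not use the submitted regexp (which appears only in the screenshot); `FLAWED` is a hypothetical pattern I assembled from the same ingredients named in the text (`\b`, `[02468]` followed by a negative lookbehind for `6`, and a trailing `\d*`), and `INTENDED` is one possible correct pattern, assuming a "number" is a maximal run of digits.

```python
import re

# HYPOTHETICAL reconstruction for illustration, not the candidate's regexp.
FLAWED = re.compile(r"\b\d+[02468](?<!6)\d*\b")
# One possible correct pattern for the question as stated.
INTENDED = re.compile(r"(?<!\d)\d+[0248](?!\d)")

sample = "In t=32.34seconds 2 of 16 will increase 11x times"
flawed_output = FLAWED.sub("0", sample)
intended_output = INTENDED.sub("0", sample)

# 1. [02468] followed by a lookbehind for 6 accepts exactly the digits [0248].
same_digit_set = (
    re.findall(r"[02468](?<!6)", "0123456789")
    == re.findall(r"[0248]", "0123456789")
)

# 2. The trailing \d* lets odd digits follow, so 221 is wrongly accepted.
wrongly_matched = FLAWED.fullmatch("221") is not None

# 3. \b fails between "4" and "s", so the 34 in "32.34seconds" is missed.
if __name__ == "__main__":
    print(flawed_output)
    print(intended_output)
```

With this hypothetical pattern the sample line comes out as "In t=0.34seconds 2 of 16 will increase 11x times", so it fails the very test case given in the question.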

The mystery was solved a little bit when I asked ChatGPT to explain this regular expression to me.

From the first line of the explanation, it is clear that ChatGPT thinks this is the correct regexp to solve the question that we had originally asked. And ChatGPT does not realize that the \d* at the end is completely messing up its logic.

But this explanation proves to me that this program came from ChatGPT and not a human programmer.

Here are other bizarre regexps cooked up by ChatGPT:

Here’s an even more bizarre example. This question was a bit different (it asked to find odd numbers not ending in 5):

What are `05` and `15` doing in that regular expression?! The `0` and `1` make absolutely no sense there and I find it hard to imagine a human writing this code. I’m just sad to note that the human did not look at this code and ask WTF?!

There were a bunch of other examples of even more bizarre code which I’m not including here because this post is already too long.

Another thing I noticed was that ChatGPT has about 6 or 7 different (wrong) techniques to solve this problem, so even though all the programs submitted (by the 75% of “definitely ChatGPT” candidates) were different from each other, the techniques and bugs were similar enough for me to be sure of the ChatGPT use.

What Do You Think?

ChatGPT and other Generative AI tools are very new and everything is changing fast. We have no clue what the limits of their capabilities are. In short, nobody knows anything.

It is possible that everything I’ve written in this article will turn out to be wrong in a year.

All we can do is keep ourselves informed about the developments, keep experimenting with various techniques and strategies and keep executing a fast OODA loop to try to ride this wave.

If you have any opinions on this topic, especially if you disagree with anything I’ve said, I would like to discuss those. Please get in touch or leave a comment.

And please share this article with people you think should know about this.

[1] I expect the importance of Liberal Arts to grow.

This is really depressing. Using chat gpt is absolutely fine but really - how much effort does it take to run the program once and see if it’s working?

Very interesting observation. An easy experiment (randomized trial) can be conducted on the platform for causal analysis.